Social media platforms are an integral part of modern-day living. They enable us to interact with family and friends, access our favorite entertainment, trade, and even acquire information. But for all the good they afford us, they have an ugly side. Unsavory posts and comments are common on them, and stemming that tide calls for action.

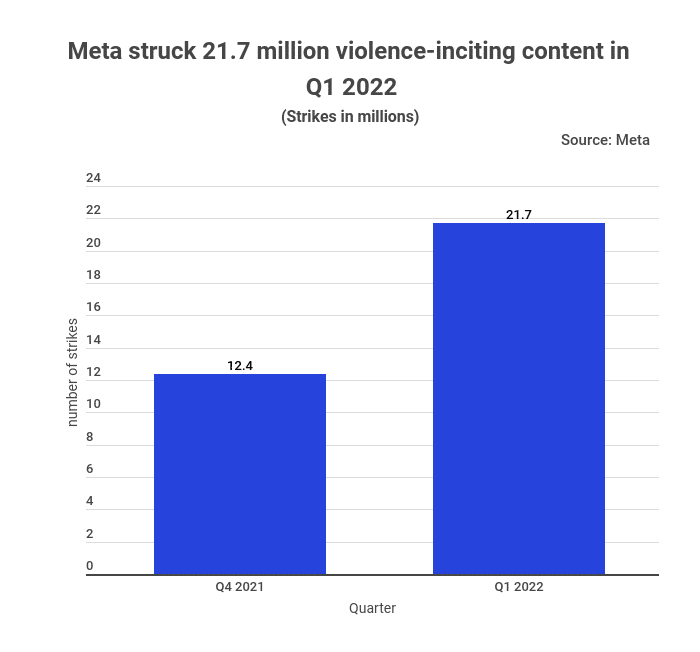

Meta, Facebook’s (FB) parent company, has taken the cue and is redoubling its efforts to curb inflammatory posts. According to a TradingPlatforms.com analysis, Meta took action on 21.7 million violence-inciting posts and comments in Q1 2022. That’s a 175% jump from the number it acted on in Q4 2021.

Is Meta seeking to gag its users?

That revelation has caught the attention of TradingPlatforms’ Edith Reads. She holds, “Meta owes its 2.8B monthly FB users the responsibility of ensuring they have wholesome interactions on it. Its actions are an admission of FB’s potency as a communication medium. It’s an acceptance of its importance in reining in any content urging violent and illegal activity.”

Edith sees Meta’s actions as ensuring decorum in how people express themselves, not as gagging its users. The firm says it has also heightened its crackdown on similar content on Instagram. It reports that in Q1 2022, it acted on 2.7M posts that it deemed to be inciting violence. That figure was a marginal rise from the 2.6M posts it acted on in Q4 2021.

FB has advanced its detection capabilities

Facebook has been facing accusations of ineffectiveness in curtailing hate-mongering and misinformation. The two are key ingredients of discrimination and violence targeting certain persons. These accusations came into the limelight after Frances Haugen leaked FB’s internal communications on the matter.

The Haugen leaks are a stinging indictment of FB’s failure to stem posts that whip up violence. They portray the social media giant as lacking the staff and local language expertise to flag incendiary content. FB’s artificial intelligence (AI) systems fare no better. According to the leaks, those AI tools lack algorithms for effectively screening content in some local languages.

Following the exposé, Meta has moved to assure its users of its commitment to upholding ethical postings on its platforms. It claims to have improved at detecting and removing hateful content promptly. It says that it has achieved that by adopting an expanded and proactive system. According to Meta, this system has enabled it to act on 98% of malicious content before users report it.

FB is no stranger to controversy

Controversy seems to be second nature to FB. A UN investigation into the ethnic cleansing of Myanmar’s Rohingya Muslims linked FB to the spread of hate against them. The Haugen documents have reinforced that finding. They show FB lacked classifiers for flagging disinformation and hate-mongering in Burmese, Oromo, or Amharic.

News outlet Reuters reports that it found posts in Amharic, a common language in Ethiopia, describing some ethnic groups as enemies and calling for their deaths. The Ethiopian government has been engaging forces from the want-away Tigray region in a year-long conflict. That fighting has killed thousands and displaced over two million others.

The firm has also hit the headlines recently after the livestreaming of a racially motivated shooting in Buffalo, NY. Many have criticized FB for taking too long to pull the content. According to the Washington Post, it took FB over ten hours to remove the footage. Consequently, it received 46,000 shares.
